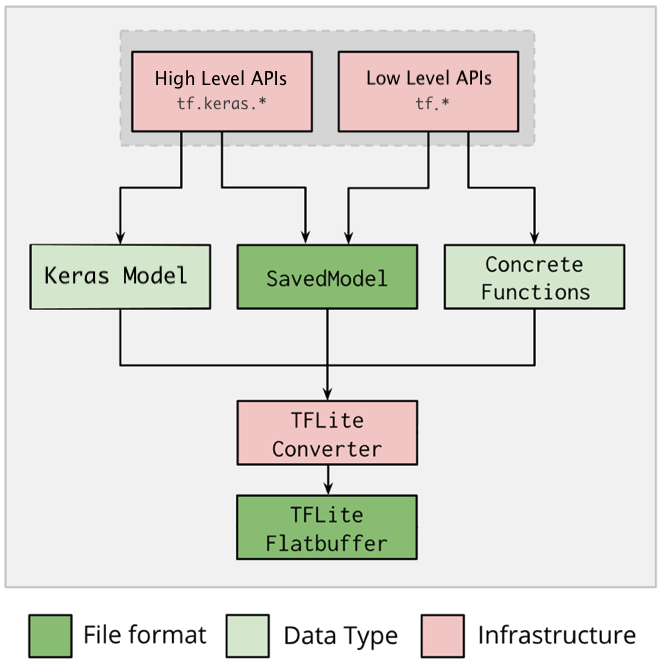

Getting an error when creating the .tflite file · Issue #412 · tensorflow/model-optimization · GitHub

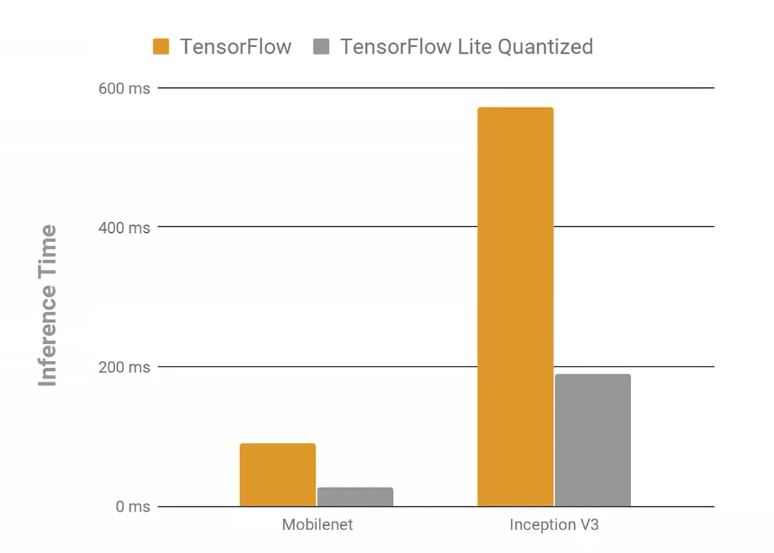

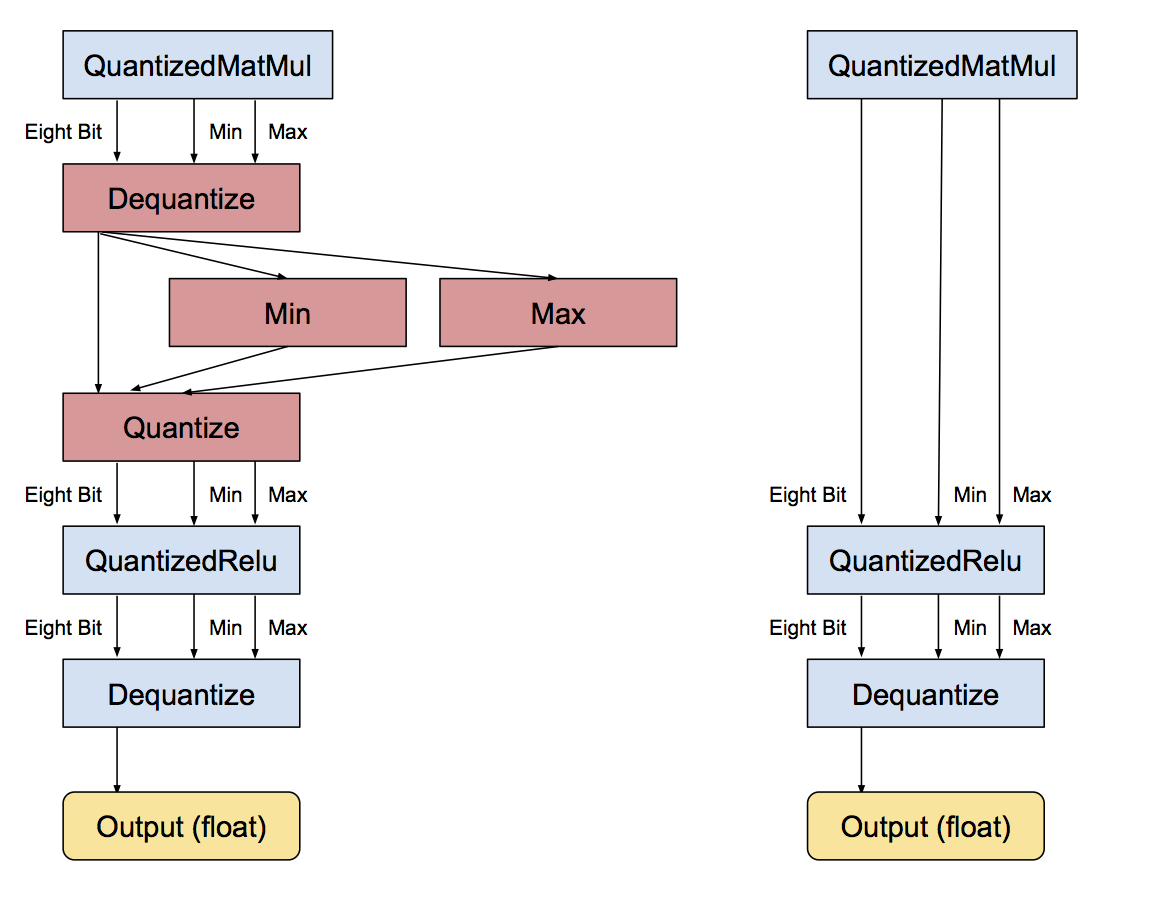

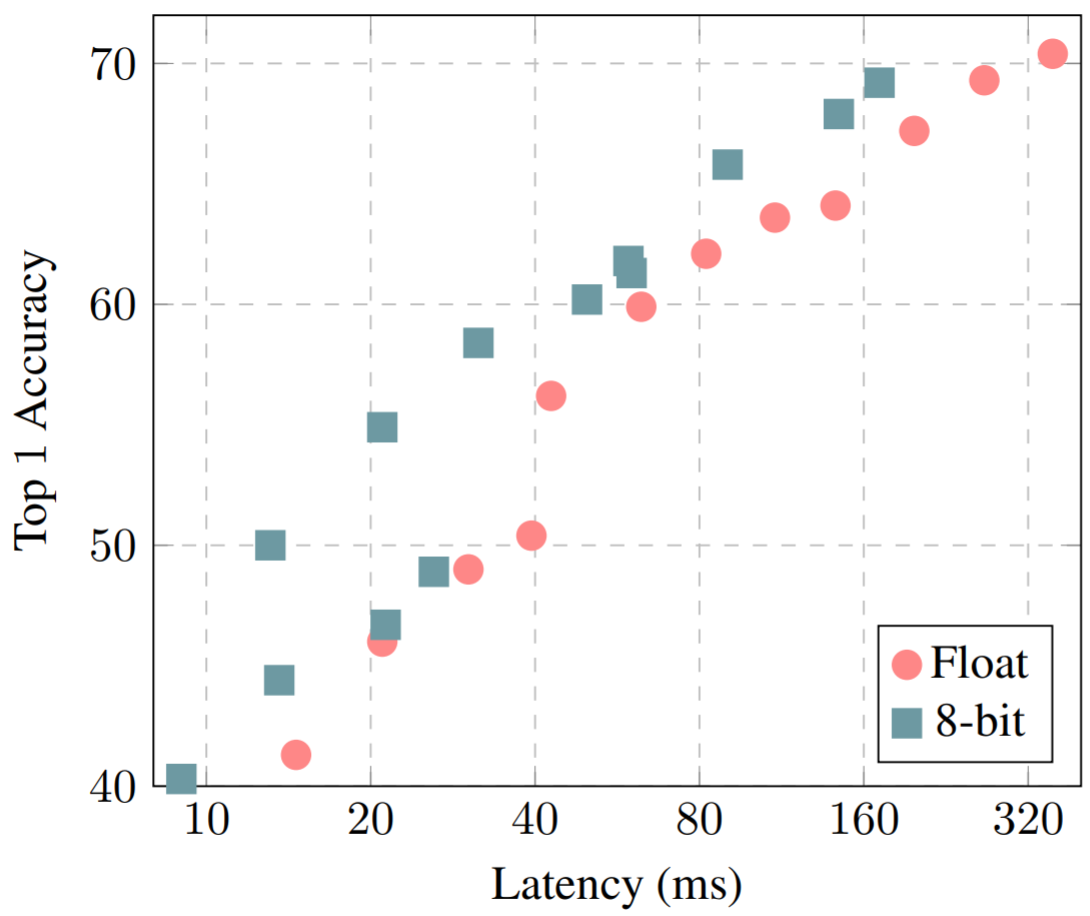

8-Bit Quantization and TensorFlow Lite: Speeding up mobile inference with low precision | by Manas Sahni | Heartbeat

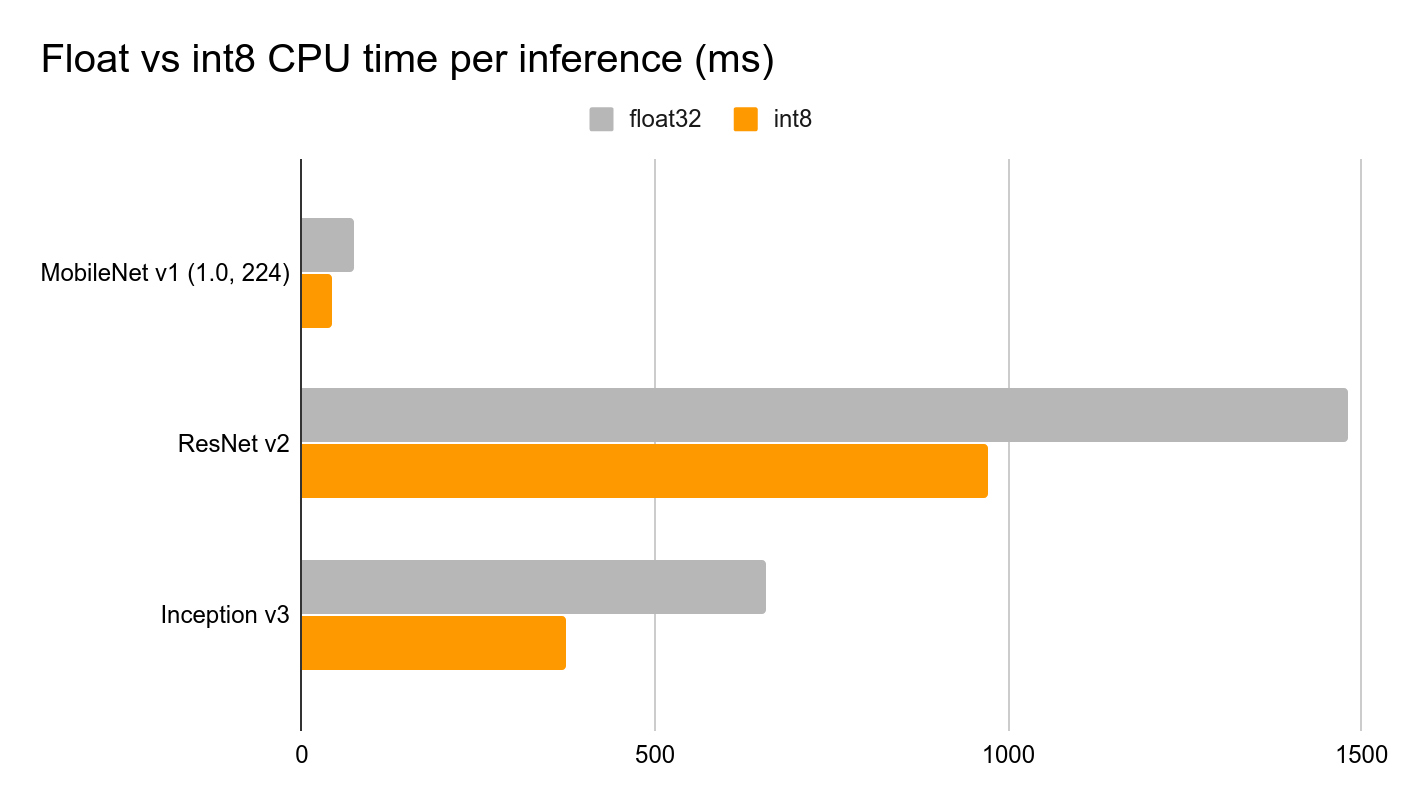

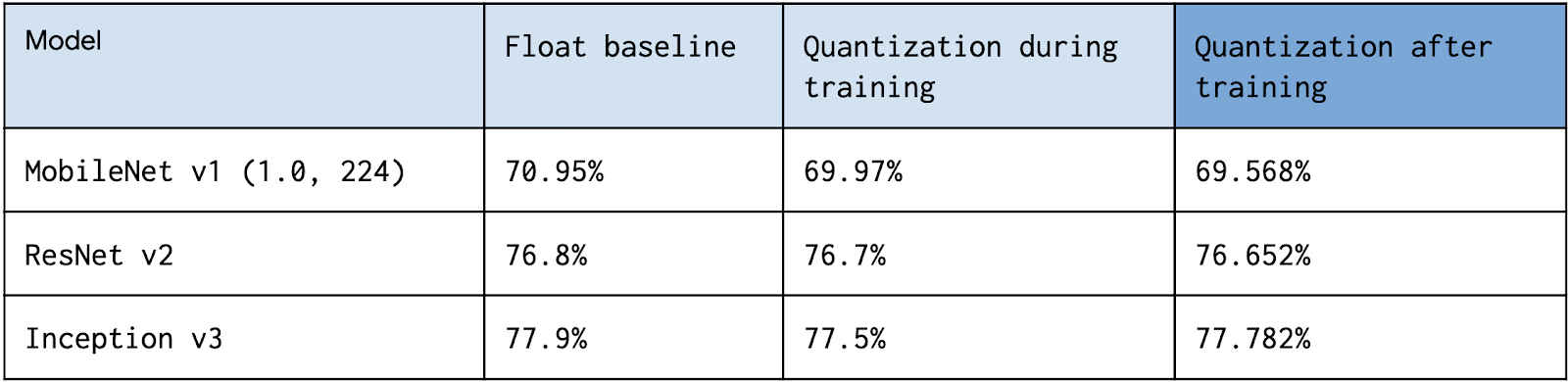

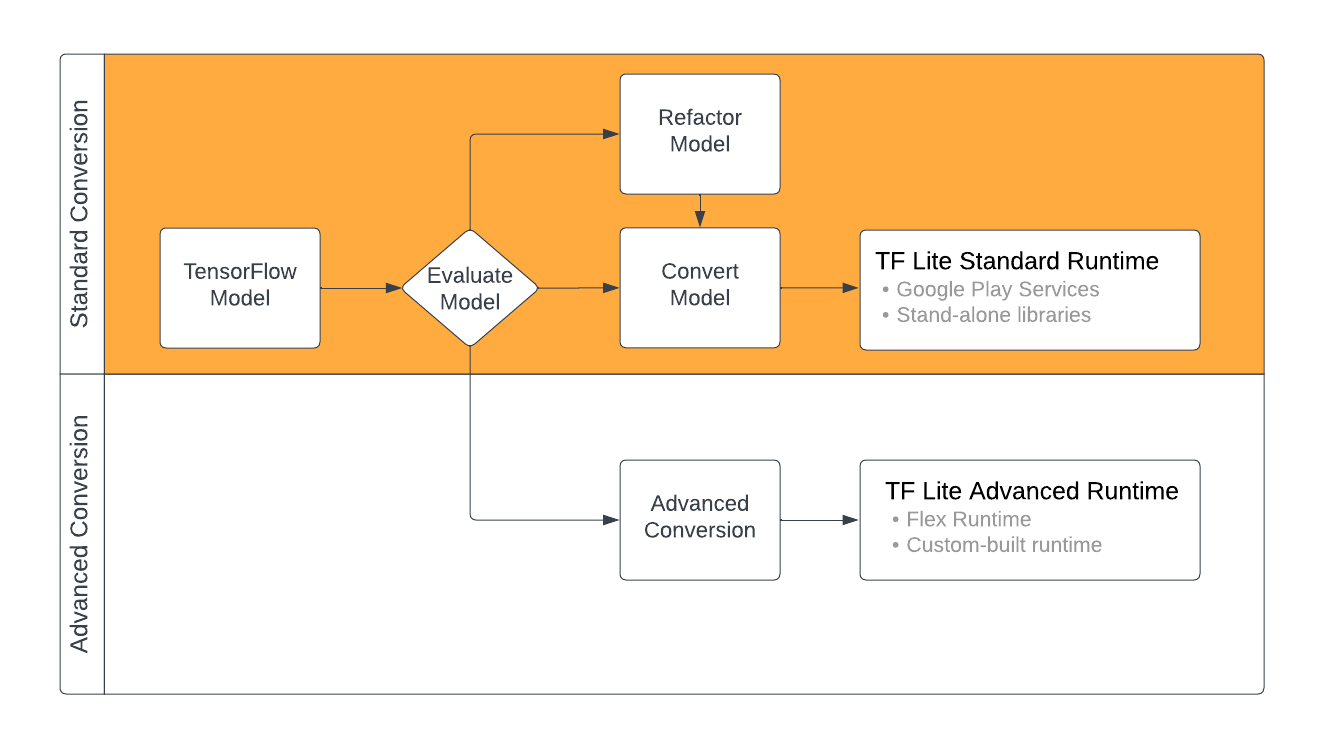

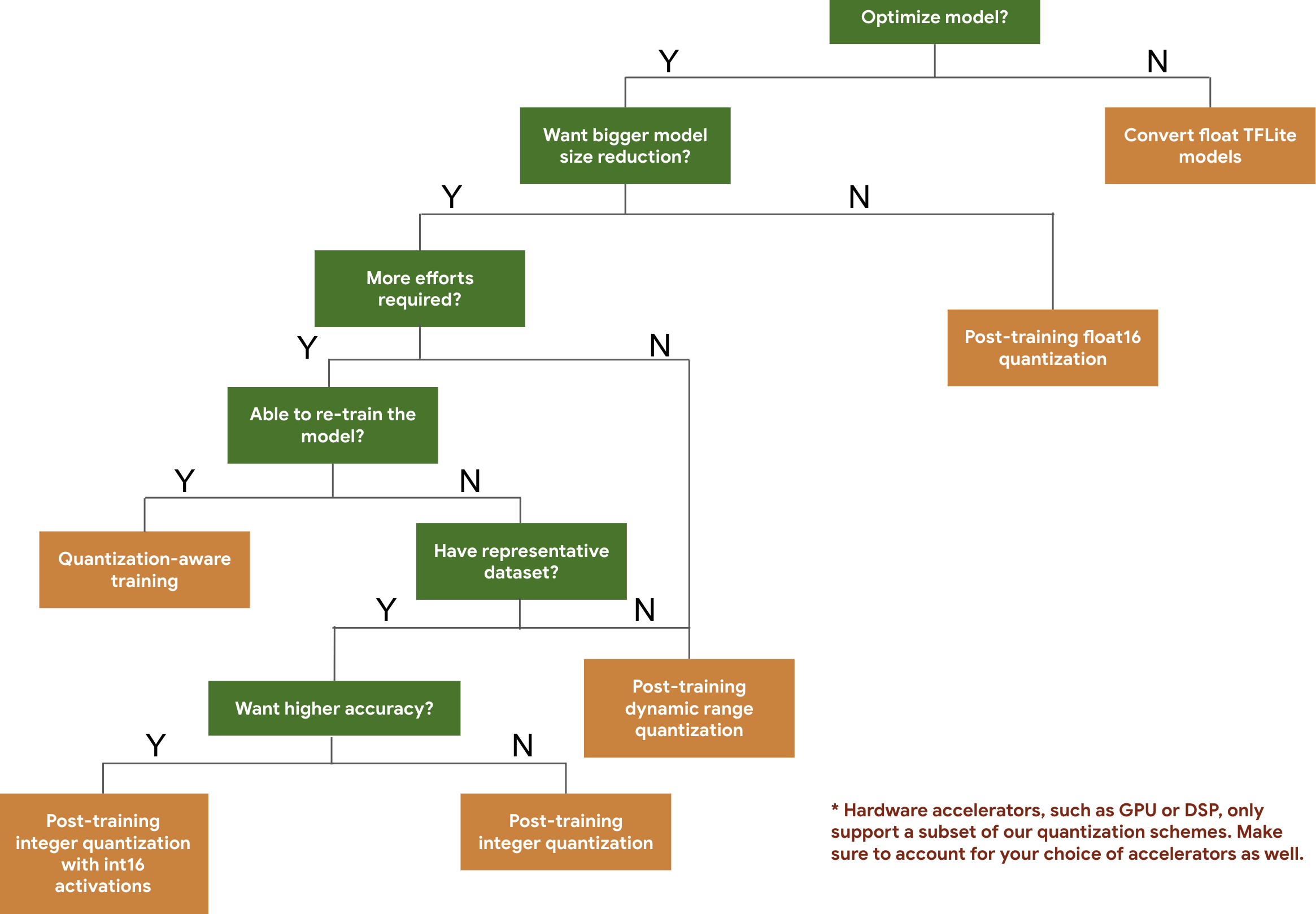

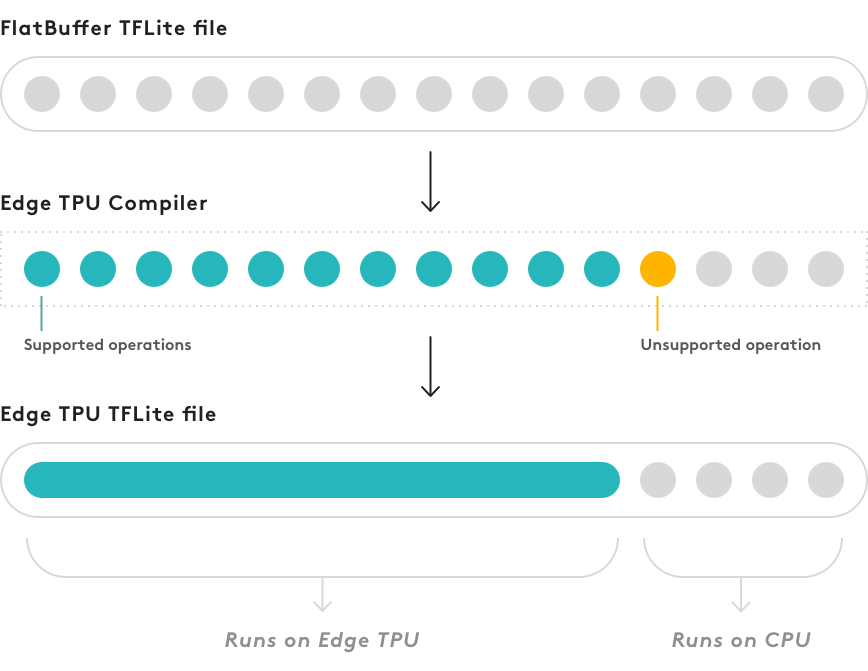

Quantization Aware Training with TensorFlow Model Optimization Toolkit - Performance with Accuracy — The TensorFlow Blog

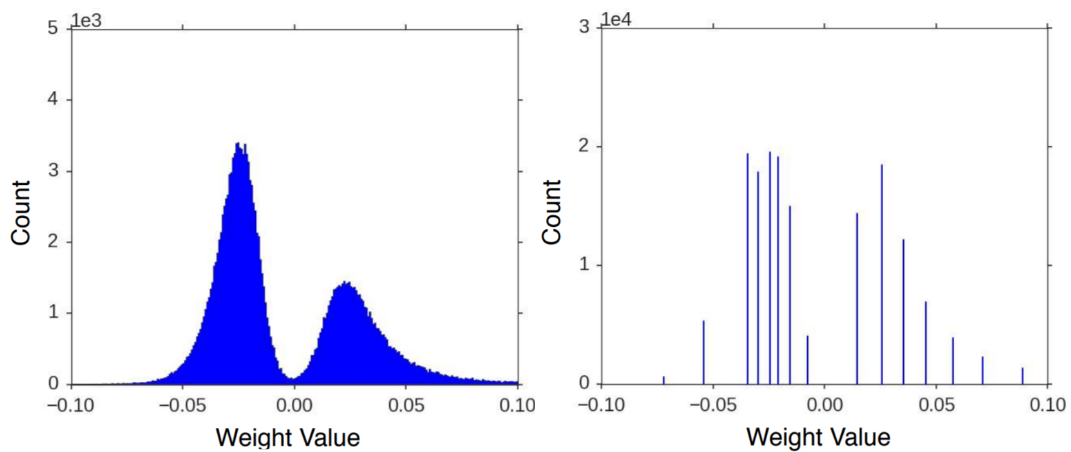

Quantized Conv2D op gives different result in TensorFlow and TFLite · Issue #38845 · tensorflow/tensorflow · GitHub